History of HPC at UEA

The history of HPC at UEA stretches back to the mid-1990s.

The HPC provision has always been specified as a general-purpose facility for research that requires compute-intensive resources. It has been used to meet a wide variety of research needs within our Science faculties, and is now attracting users from across the University, from the Medical School to Economics.

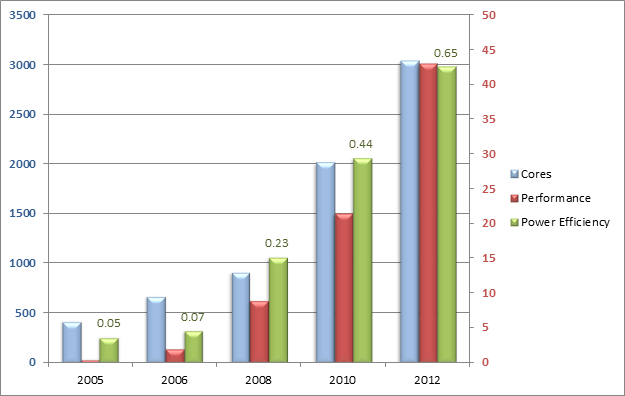

Over the years, HPC provision has been through several iterations. It has grown from a handful of Unix workstations serving a dozen applications, with less than 1 GB of RAM and 100 GB of storage, to today's Linux cluster, which has over 3000 CPU cores, over 6 TB of RAM and more than 100 TB of storage, and provides approximately 350 applications.
